
I am a senior Machine Learning Engineer at Oxa (Oxbotica), a prominent self-driving vehicle company based in Oxford, UK. I work in the Perception team, taking a safety-focused approach and building our safety architecture from the ground up. Software and hardware redundancy, statistical verification, and validation are at the heart of what we build and deploy. I am responsible for building laser and camera safety networks that independently predict bird's-eye-view (BEV) occupancy and velocity, which are used to assess collision risk. For the safety certification of our perception components, I am engaged in a multi-year project building data pipelines for a large-scale, human-annotated, multi-modal dataset.

Previously, I worked with Prof. Christian Laugier as a research engineer in his autonomous driving team (Chroma) at Inria Grenoble, France, at the intersection of deep learning and traditional computer vision. Our main focus was sensor fusion of LiDARs, RGB cameras, and event-based cameras for increased robustness. During five years at Inria, I was fortunate to author multiple publications at top robotics and AI conferences and to work across a diverse set of domains: 2D and 3D object detection, semantic segmentation, depth estimation, motion forecasting, occupancy grid prediction, collision risk estimation, simulation in CARLA and Gazebo, localization, tracking, and many more applications.

I received my master's degree in Graphics, Vision, and Robotics from ENSIMAG, a prestigious French Grande École in Grenoble, France. My master's thesis, supervised by Prof. Christian Wolf, explored visual attention mechanisms for 3D space. I am an active reviewer for top robotics conferences, including ICRA, IROS, IV, ITSC, ICARCV, and CIS-RAM.



Alongside my research, I have a strong entrepreneurial spirit: I founded Délice Robotics and helped kickstart IvLabs.


Anshul Paigwar, Yoann Allardin, Didier Lasserre, Samuel Heidmann. Incubated at Inria Startup Studio, France, Nov 2020 - Nov 2021. At Délice, we built robotic and AI systems to automate food preparation and vending, with the aim of making quality food more affordable and available to everyone. I worked on many facets of the company, including project ideation, fundraising (100K euros), team building, prototyping, the business model, understanding customer needs, and product demos and presentations.


Rohan Thakker, Ajinkya Kamat, Anshul Paigwar, Sai Teja Manchukanti, Akash Singh, Prasad Vagdargi, Manish Maurya, Manish Saroya, and many other great minds. Students' robotics and AI lab at VNIT Nagpur, India. I helped kickstart IvLabs! It has grown into one of the top robotics labs in India, with over 100 active members. I regularly mentor and manage student projects at IvLabs to share knowledge and give back to the community.


I'm interested in computer vision, machine learning, and image processing. Much of my research is about inferring the dynamic environment around a robot from images and point clouds. Representative papers are highlighted.


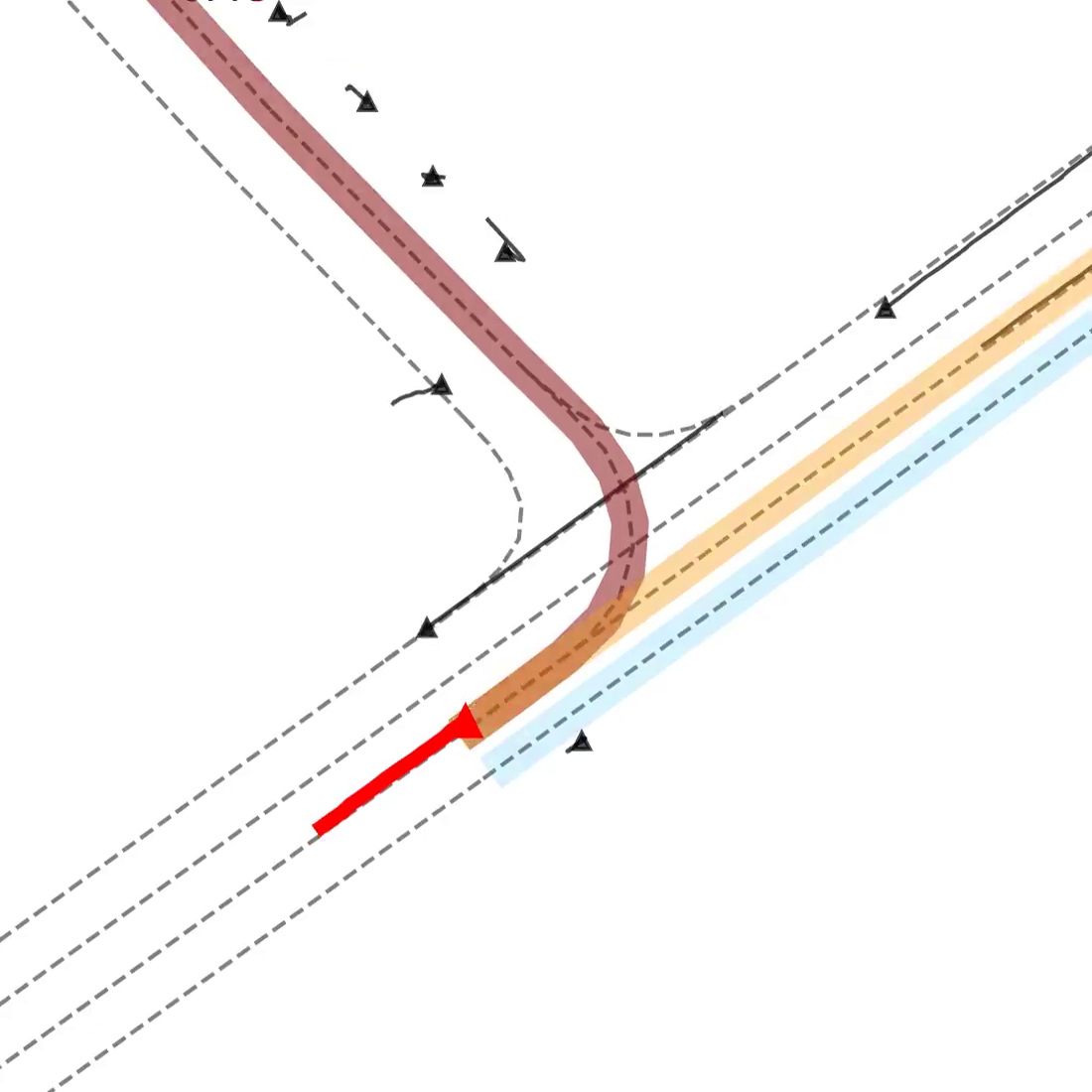

David Sierra-Gonzalez, Anshul Paigwar, Özgür Erkent, Christian Laugier. IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), 2022. A graph neural network (GNN) predicts the lane centerline that the target agent intends to follow. Conditioned on that centerline, we predict a distribution over potential endpoints and multiple lane-oriented trajectory realizations.

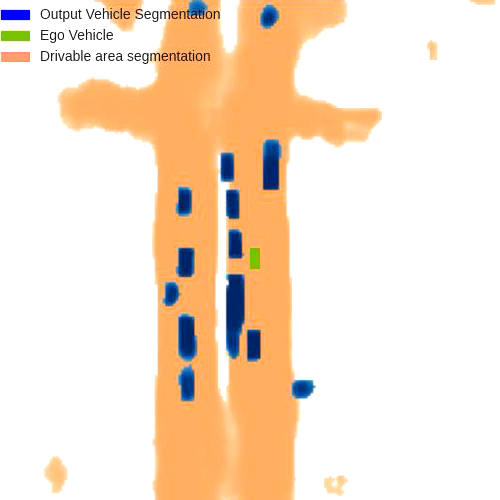


Gustavo Salazar-Gomez, David Sierra-Gonzalez, Manuel Diaz, Anshul Paigwar, Wenqian Liu, Özgür Erkent, Christian Laugier. ICARCV 2022 - 17th International Conference on Control, Automation, Robotics and Vision, Dec 2022, Singapore. An architecture that fuses multi-camera and LiDAR data at different scales to produce bird's-eye-view (BEV) semantic grids of the environment.


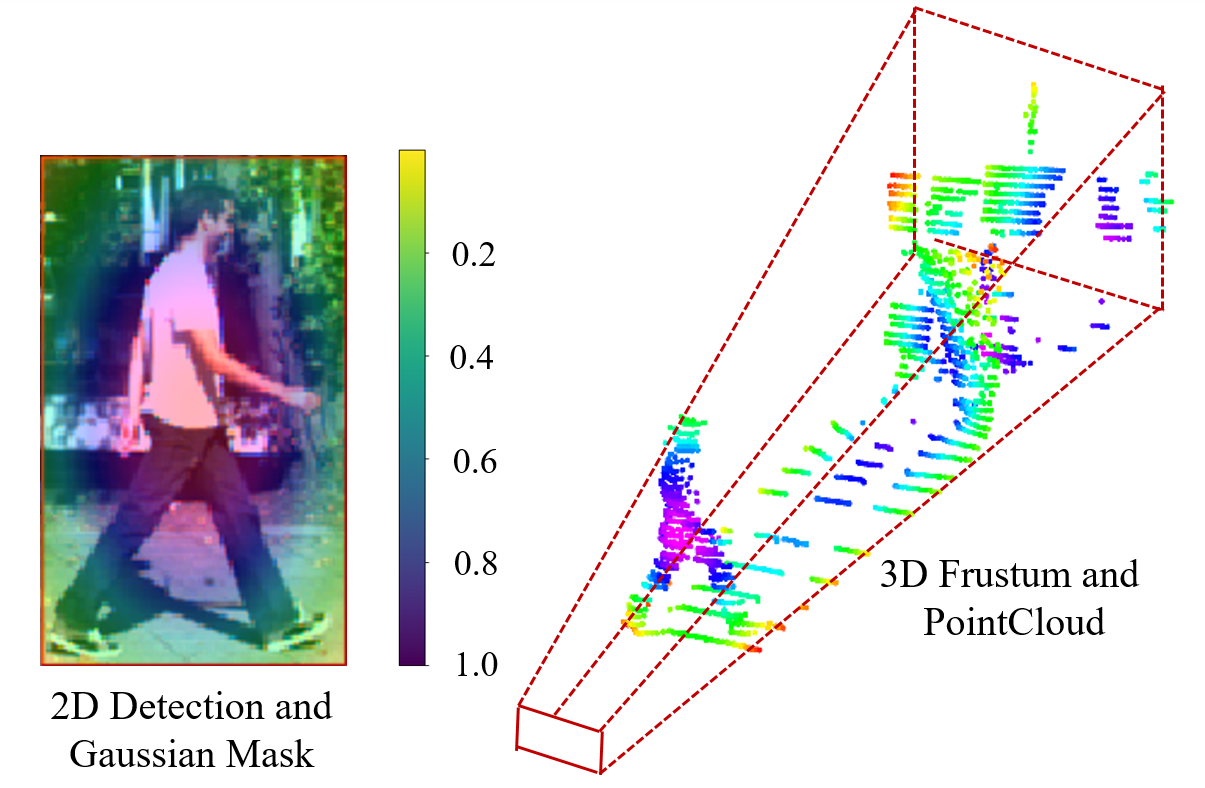

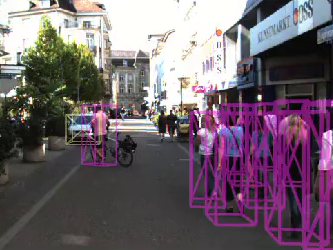

Anshul Paigwar, David Sierra-Gonzalez, Özgür Erkent, Christian Laugier. IEEE/CVF International Conference on Computer Vision (ICCV), 2021, Workshop on Autonomous Vehicle Vision. We leverage 2D object detection to reduce the search space in 3D, then use a Pillar Feature Encoding network to localize objects in the reduced point cloud. At the time of submission, F-PointPillars ranked among the top 5 approaches for BEV pedestrian detection on the KITTI dataset.
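The first stage of this pipeline, restricting the 3D search to the frustum behind a 2D detection, can be illustrated with a short NumPy sketch. It is not the F-PointPillars code: it assumes the LiDAR points have already been transformed into the camera frame (z pointing forward) and a pinhole intrinsic matrix `K`; the function name `points_in_frustum` and the example numbers are hypothetical.

```python
import numpy as np

def points_in_frustum(points_cam: np.ndarray, K: np.ndarray, box2d) -> np.ndarray:
    """Return the camera-frame points whose image projection falls inside box2d.

    points_cam: (N, 3) array of x, y, z in the camera frame (z points forward).
    K: (3, 3) pinhole intrinsic matrix (assumed known from calibration).
    box2d: (u_min, v_min, u_max, v_max) in pixels, e.g. from a 2D detector.
    """
    # Points behind the camera cannot project into the image, so drop them first.
    pts = points_cam[points_cam[:, 2] > 0]
    # Pinhole projection: [u, v, 1]^T is proportional to K [x, y, z]^T.
    uvw = pts @ K.T
    u = uvw[:, 0] / uvw[:, 2]
    v = uvw[:, 1] / uvw[:, 2]
    u_min, v_min, u_max, v_max = box2d
    inside = (u >= u_min) & (u <= u_max) & (v >= v_min) & (v <= v_max)
    return pts[inside]

# Hypothetical intrinsics and three points: one inside the box, one to the side, one behind.
K = np.array([[700.0, 0.0, 600.0], [0.0, 700.0, 180.0], [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 10.0], [5.0, 0.0, 10.0], [0.0, 0.0, -5.0]])
frustum = points_in_frustum(pts, K, (550, 130, 650, 230))
```

Only the points that survive this cut are passed to the 3D network, which is where the reduction in search space comes from.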


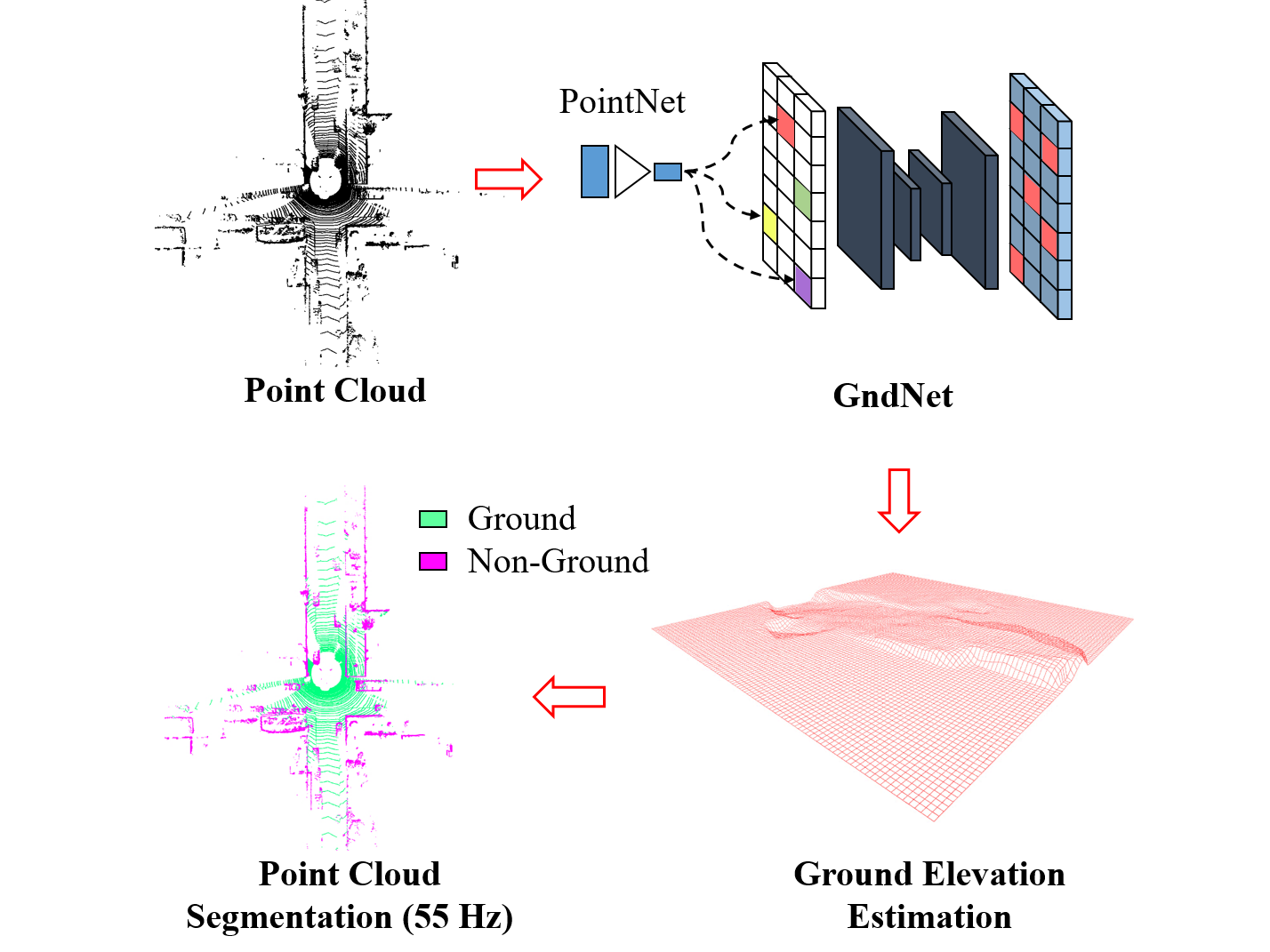

Anshul Paigwar, Özgür Erkent, David Sierra-Gonzalez, Christian Laugier. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020. GndNet uses PointNet and a Pillar Feature Encoding network to extract features and regresses the ground height for each cell of a grid. GndNet established a new state of the art and runs at 55 Hz for ground plane estimation and ground point segmentation.
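Once a ground height has been regressed for every grid cell, ground point segmentation reduces to a per-point height comparison. The sketch below assumes a hypothetical per-cell height map `ground_height` (the kind of output a GndNet-style network produces), a grid with origin `(x_min, y_min)` and square cells of size `cell`, and a hypothetical 0.2 m threshold; it is an illustration, not the GndNet implementation.

```python
import numpy as np

def segment_ground(points, ground_height, x_min, y_min, cell, threshold=0.2):
    """Label points as ground if they lie within `threshold` metres of their cell's ground height.

    points: (N, 3) array of x, y, z.
    ground_height: (H, W) ground elevation per cell; rows follow y, columns follow x.
    Returns a boolean mask of shape (N,); points outside the grid are labelled non-ground.
    """
    cols = np.floor((points[:, 0] - x_min) / cell).astype(int)
    rows = np.floor((points[:, 1] - y_min) / cell).astype(int)
    H, W = ground_height.shape
    valid = (rows >= 0) & (rows < H) & (cols >= 0) & (cols < W)
    mask = np.zeros(len(points), dtype=bool)
    # Height of each in-grid point relative to the ground estimate of its cell.
    dz = points[valid, 2] - ground_height[rows[valid], cols[valid]]
    mask[valid] = np.abs(dz) < threshold
    return mask

# Example: a flat 4 x 4 grid of 1 m cells with the ground at z = -1.7 m.
ground = np.full((4, 4), -1.7)
pts = np.array([[0.5, 0.5, -1.65], [1.5, 2.5, 0.3], [10.0, 0.0, -1.7]])
is_ground = segment_ground(pts, ground, x_min=0.0, y_min=0.0, cell=1.0)
```

Because the lookup and comparison are fully vectorised, this step adds very little to the network's own run time.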


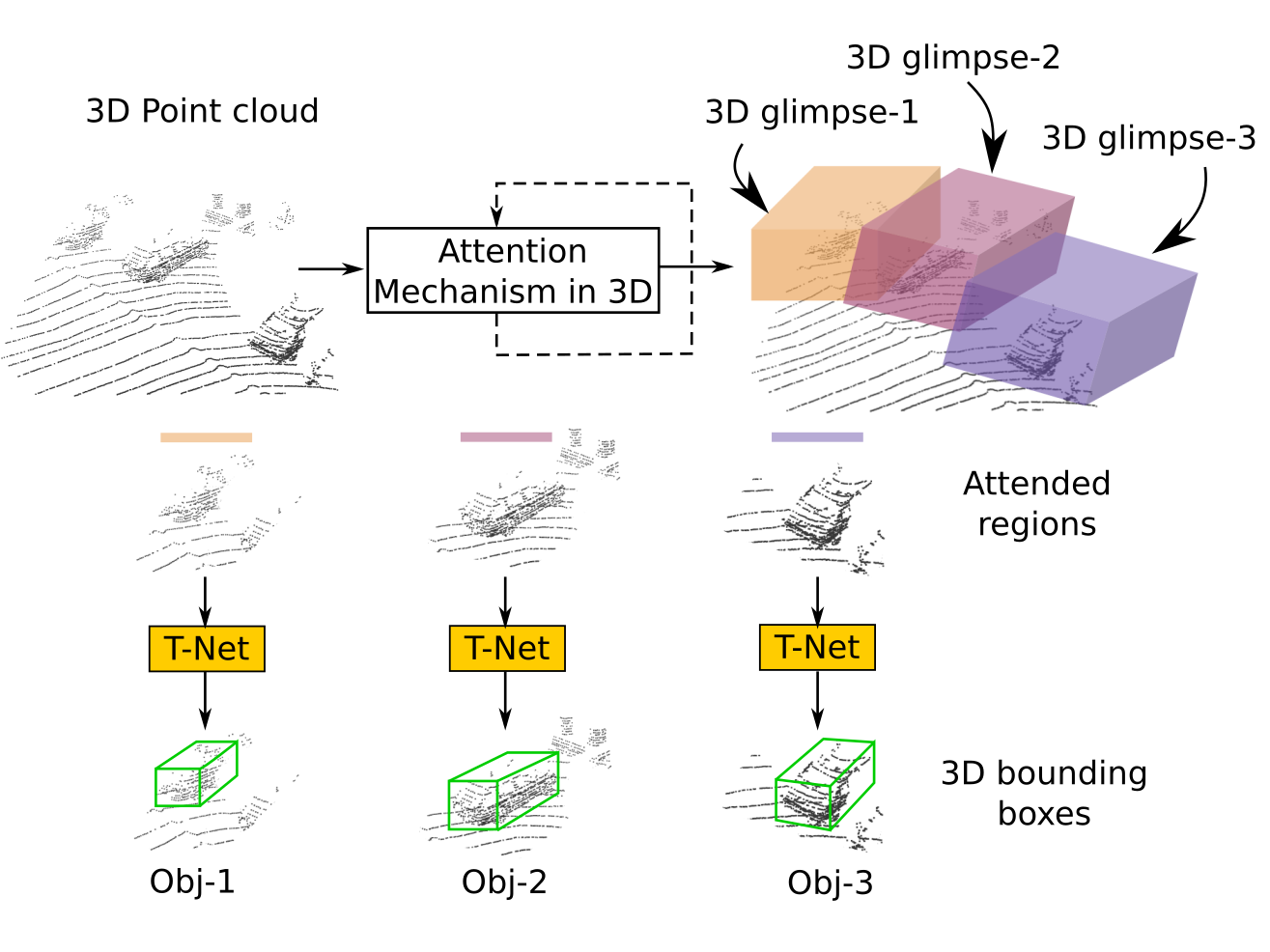

Anshul Paigwar, Özgür Erkent, Christian Wolf, Christian Laugier. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, Workshop on Autonomous Driving. We extend the theory of visual attention mechanisms to 3D point clouds and introduce a new recurrent 3D Localization Network module. Rather than processing the whole point cloud, the network learns where to look (finding regions of interest), which significantly reduces both the number of points to be processed and the inference time.


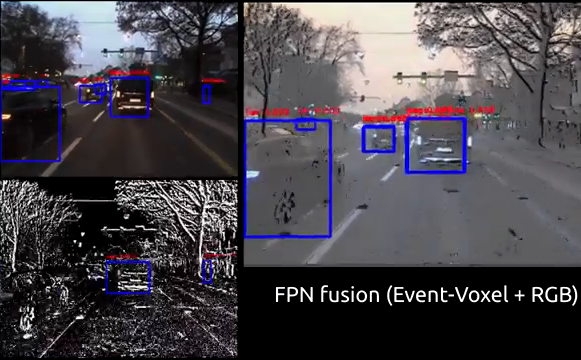

Abhishek Tomy, Anshul Paigwar, Khushdeep Singh Mann, Alessandro Renzaglia, Christian Laugier. IEEE International Conference on Robotics and Automation (ICRA), 2022, Philadelphia, United States. We propose a redundant sensor fusion model of event-based and frame-based cameras that is robust to common image corruptions. Our sensor fusion approach is over 30% more robust to corruptions than frame-only detection and outperforms event-only detection.


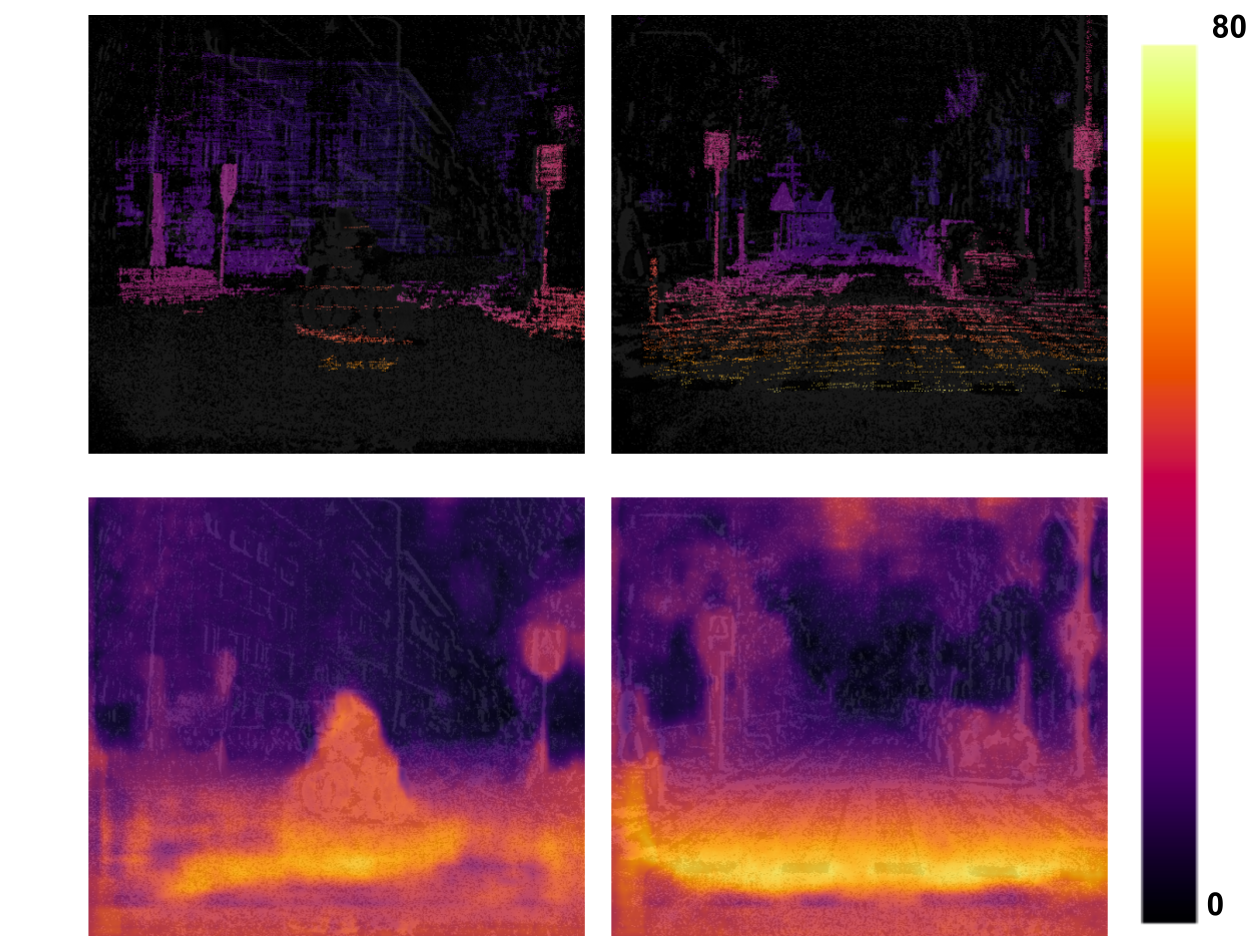

Abhishek Tomy, Anshul Paigwar, Alessandro Renzaglia, Christian Laugier. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, Workshop on Event-based Vision. STADIE-Net predicts disparity with stage-wise refinement, using events from two neuromorphic cameras in a stereo setup. The method takes a voxel-grid representation of the events as input and uses a four-stage network that refines the disparity prediction from coarse to fine.
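A widely used voxel-grid encoding of events splits each event's polarity between its two nearest temporal bins with a linear kernel, turning an asynchronous event stream into a tensor a CNN can consume. The sketch below shows that encoding, assuming events arrive as integer pixel coordinates, timestamps, and ±1 polarities; the exact variant used in STADIE-Net may differ, and `events_to_voxel_grid` is a hypothetical name.

```python
import numpy as np

def events_to_voxel_grid(x, y, t, p, num_bins, height, width):
    """Accumulate events into a (num_bins, height, width) voxel grid.

    x, y: integer pixel coordinates; t: timestamps; p: polarities in {-1, +1}.
    Each event's polarity is split between its two nearest temporal bins
    with a linear (triangular) kernel.
    """
    grid = np.zeros((num_bins, height, width), dtype=np.float64)
    t = np.asarray(t, dtype=np.float64)
    p = np.asarray(p, dtype=np.float64)
    # Normalise timestamps to the range [0, num_bins - 1].
    span = t.max() - t.min()
    t_norm = (num_bins - 1) * (t - t.min()) / span if span > 0 else np.zeros_like(t)
    lower = np.floor(t_norm).astype(int)
    frac = t_norm - lower
    upper = np.minimum(lower + 1, num_bins - 1)
    # np.add.at accumulates correctly when several events hit the same voxel.
    np.add.at(grid, (lower, y, x), p * (1.0 - frac))
    np.add.at(grid, (upper, y, x), p * frac)
    return grid

# Three events on a 2 x 2 sensor; the middle one falls halfway between bins 0 and 1.
xs = np.array([0, 1, 1])
ys = np.array([0, 0, 1])
ts = np.array([0.0, 0.25, 1.0])
ps = np.array([1.0, -1.0, 1.0])
grid = events_to_voxel_grid(xs, ys, ts, ps, num_bins=3, height=2, width=2)
```

In a stereo setup, one such grid per camera would be fed to the network; keeping fractional weights preserves sub-bin timing information that simple event counting would discard.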


Khushdeep Singh Mann, Abhishek Tomy, Anshul Paigwar, Alessandro Renzaglia, Christian Laugier. IEEE Intelligent Vehicles Symposium (IV), 2022, Aachen, Germany. We propose a spatio-temporal prediction pipeline that takes past observations of the environment and semantic labels as separate inputs to predict occupancy over a longer horizon of 3 seconds in a relatively complex environment.


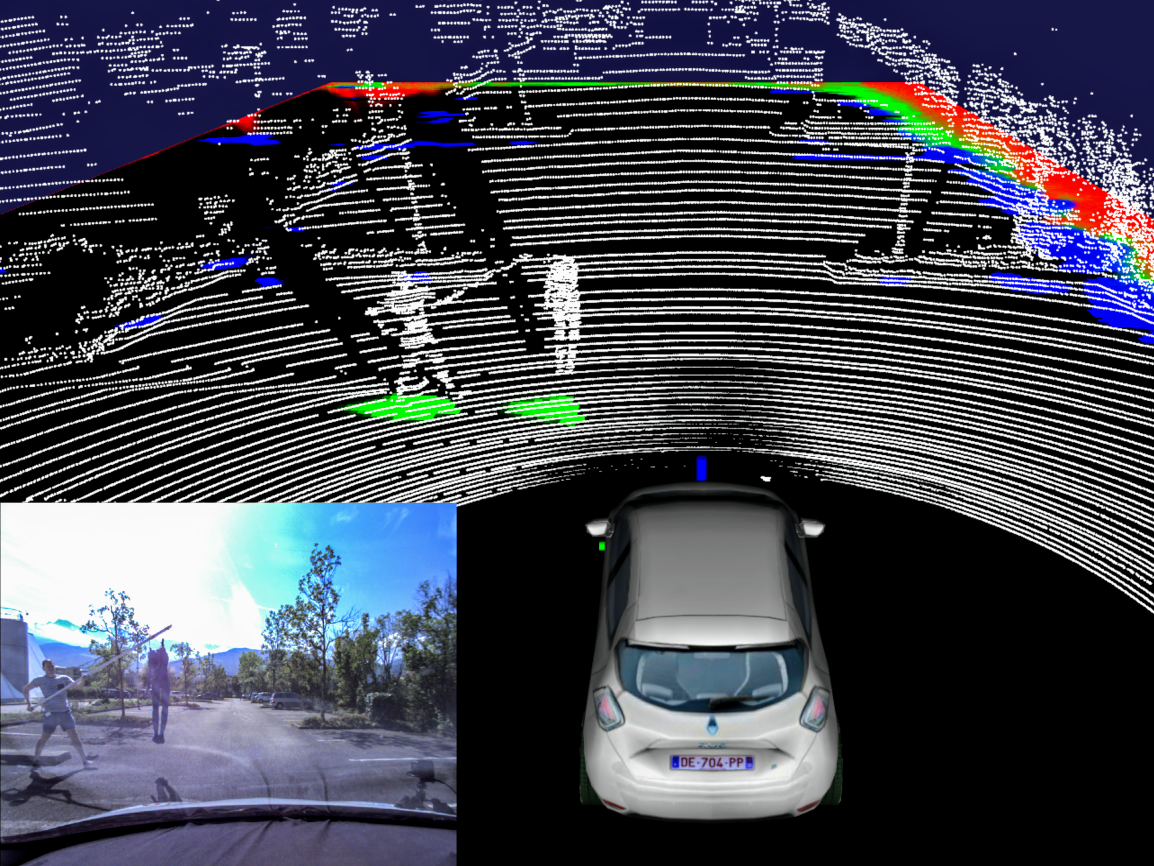

Unmesh Patil, Alessandro Renzaglia, Anshul Paigwar, Christian Laugier. IEEE International Conference on Advanced Robotics (ICAR), 2021. We propose new probabilistic models to obtain Stochastic Reachability Spaces for vehicles and pedestrians detected in the scene. We then exploit these probabilistic predictions of road users' future positions, along with the expected ego-vehicle trajectory, to estimate the probability of collision in real time.
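A simple way to turn probabilistic position predictions into a collision probability is Monte Carlo sampling. The sketch below is not the paper's method: it swaps in per-step Gaussian predictions (`agent_mean`, `agent_cov`) in place of Stochastic Reachability Spaces, treats the ego vehicle and the road user as colliding when their centres are closer than a hypothetical `radius`, and uses the hypothetical name `collision_risk_per_step`.

```python
import numpy as np

def collision_risk_per_step(ego_traj, agent_mean, agent_cov, radius, num_samples=10_000, seed=0):
    """Monte Carlo estimate of the collision probability at each future time step.

    ego_traj: (T, 2) planned ego positions, one per time step.
    agent_mean: (T, 2) predicted mean positions of a road user (assumed Gaussian).
    agent_cov: (T, 2, 2) covariance of that prediction at each step.
    radius: centre distance below which a sample counts as a collision.
    """
    rng = np.random.default_rng(seed)
    T = len(ego_traj)
    risks = np.empty(T)
    for k in range(T):
        samples = rng.multivariate_normal(agent_mean[k], agent_cov[k], size=num_samples)
        dist = np.linalg.norm(samples - ego_traj[k], axis=1)
        # Fraction of sampled agent positions that overlap the ego vehicle.
        risks[k] = np.mean(dist < radius)
    return risks

# Ego drives along x; the (hypothetical) pedestrian prediction converges on the ego's position at step 2.
ego = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
mean = np.array([[10.0, 10.0], [5.0, 5.0], [2.0, 0.0]])
cov = np.stack([0.01 * np.eye(2)] * 3)
risks = collision_risk_per_step(ego, mean, cov, radius=0.5)
```

Reporting a risk per time step avoids assuming independence between steps; a sampler over whole trajectories would be needed to compute the probability of a collision at any point along the horizon.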


Anshul Paigwar, Eduard Baranov, Alessandro Renzaglia, Christian Laugier, Axel Legay. IEEE Intelligent Vehicles Symposium (IV), 2020. We present a Statistical Model Checking (SMC) approach to validate the collision risk assessment generated by a probabilistic perception system. SMC sits between testing and exhaustive verification: it relies on statistics and estimates the probability of key performance indicators (KPIs) from a large number of simulations.
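The number of simulations an SMC run needs can be fixed in advance with the Chernoff-Hoeffding (Okamoto) bound, N ≥ ln(2/δ) / (2ε²), which guarantees that the estimated probability lies within ε of the true one with confidence at least 1 - δ. A minimal sketch, assuming a hypothetical `simulate` callable that runs one randomised scenario and reports whether the monitored KPI property holds:

```python
import math
import random

def chernoff_sample_size(epsilon: float, delta: float) -> int:
    """Simulations needed so that P(|p_hat - p| >= epsilon) <= delta."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))

def estimate_probability(simulate, epsilon=0.01, delta=0.05, seed=0):
    """Monte Carlo SMC estimate of the probability that a property holds.

    simulate: hypothetical callable taking a random.Random and returning True
    when the monitored property (e.g. a KPI threshold) is satisfied in that run.
    """
    rng = random.Random(seed)
    n = chernoff_sample_size(epsilon, delta)
    successes = sum(bool(simulate(rng)) for _ in range(n))
    return successes / n, n

# Toy stand-in for a simulator run in which the property holds 30% of the time.
p_hat, n_runs = estimate_probability(lambda rng: rng.random() < 0.3)
```

The bound depends only on ε and δ, not on the size of the scenario space, which is why SMC scales to systems that are too large for exhaustive verification.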


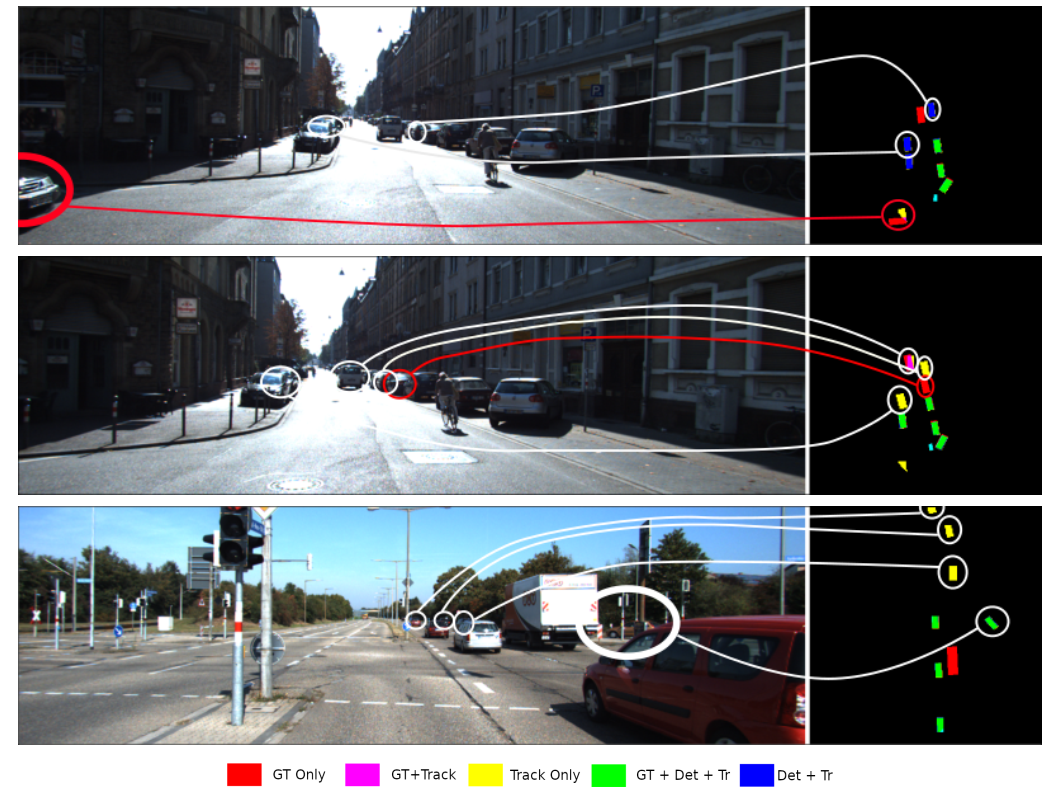

Özgür Erkent, David Sierra-Gonzalez, Anshul Paigwar, Christian Laugier. International Conference on Computer Vision Systems (ICVS), 2021. We fuse a dynamic occupancy grid map (DOGMa) with an object detector. The DOGMa is obtained by applying a Bayesian filter to raw sensor data, which improves the tracking of partially observed or unobserved objects.
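At its core, a Bayesian occupancy filter updates each cell's belief with every new scan. A full DOGMa also estimates the dynamics of each cell; the sketch below covers only the static part, using the standard log-odds occupancy update. The increments `l_hit` and `l_miss`, the clamp value, and the function names are hypothetical placeholders.

```python
import numpy as np

def update_log_odds(log_odds, hit_mask, miss_mask, l_hit=0.85, l_miss=-0.4, clamp=5.0):
    """One Bayesian (log-odds) occupancy update for a static grid.

    log_odds: (H, W) current log-odds of occupancy; 0 means p = 0.5 (unknown).
    hit_mask: cells where the sensor observed an obstacle in this scan.
    miss_mask: cells the sensor observed as free in this scan.
    l_hit / l_miss: hypothetical inverse-sensor-model increments.
    """
    out = log_odds.copy()
    out[hit_mask] += l_hit
    out[miss_mask] += l_miss
    # Clamping keeps cells able to change state quickly if the scene changes.
    return np.clip(out, -clamp, clamp)

def occupancy_probability(log_odds):
    """Convert log-odds back to occupancy probability."""
    return 1.0 / (1.0 + np.exp(-log_odds))

# Three identical scans: one cell repeatedly hit, one repeatedly seen as free.
grid = np.zeros((2, 2))
hits = np.array([[True, False], [False, False]])
misses = np.array([[False, False], [False, True]])
for _ in range(3):
    grid = update_log_odds(grid, hits, misses)
prob = occupancy_probability(grid)
```

Working in log-odds turns the Bayesian product of likelihoods into a sum, so each update is a cheap per-cell addition.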


David Sierra-Gonzalez, Anshul Paigwar, Özgür Erkent, Christian Laugier. IEEE International Conference on Control, Automation, Robotics and Vision (ICARCV), 2020. We use dynamic occupancy grid maps to exploit the dynamic information in driving sequences for 3D object detection. Our results show that access to the environment dynamics improves the detection algorithm's ability to predict the orientation of smaller obstacles, such as pedestrians, by 27%.


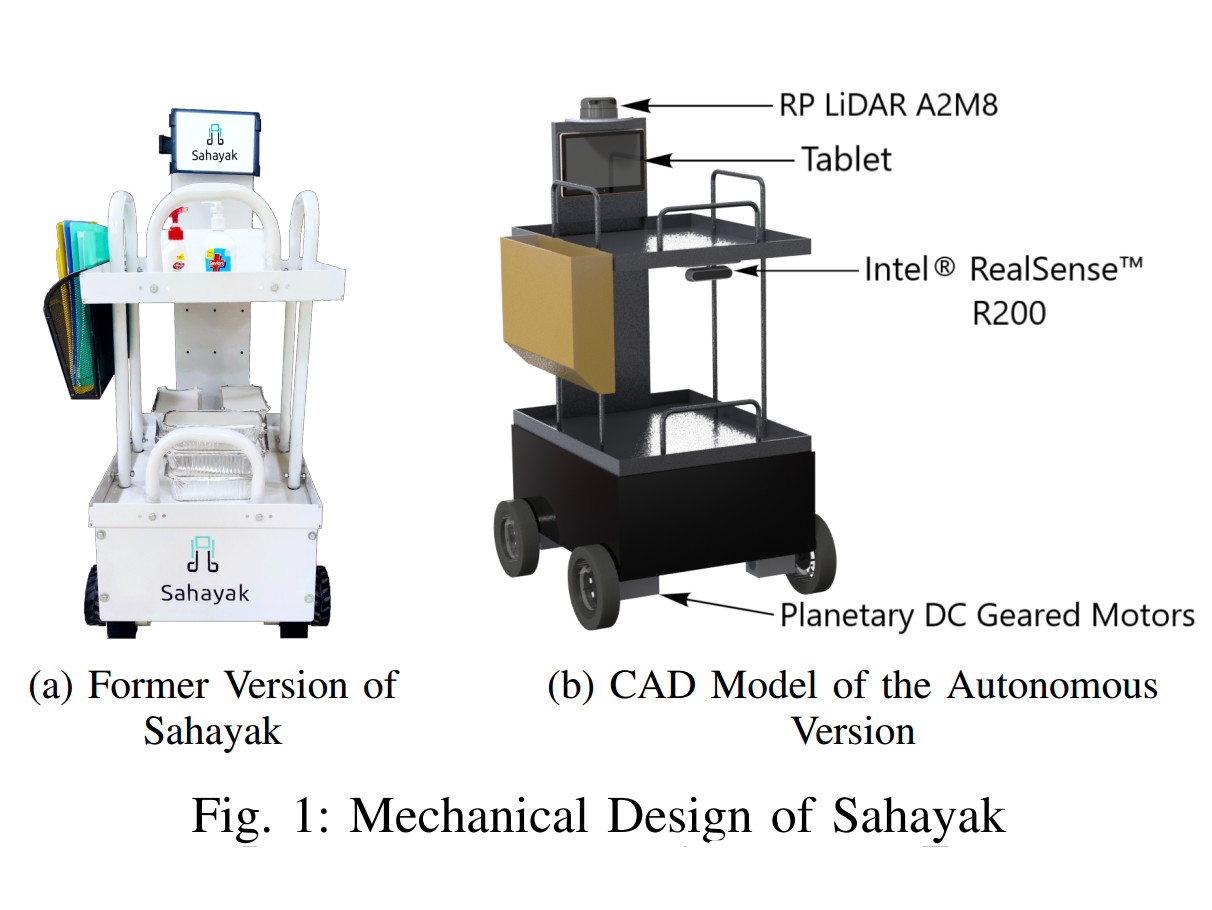

Karthik Raman, Prathamesh Ringe, Sania Subhedar, Sushlok Shah, Yagnesh Devada, Aayush Fadia, Kushagra Srivastava, Varad Vaidya, Harshad Zade, Ajinkya Kamat, Anshul Paigwar, Shital Chiddarwar. IEEE International Symposium on Medical Robotics (ISMR), 2021, poster submission. We propose a general framework for developing medical assistive robots capable of delivering food and medicine to patients and facilitating teleconferencing with doctors. The robot's autonomous mobility is validated in simulated environments, while a teleoperated prototype was deployed at AIIMS Nagpur, India.


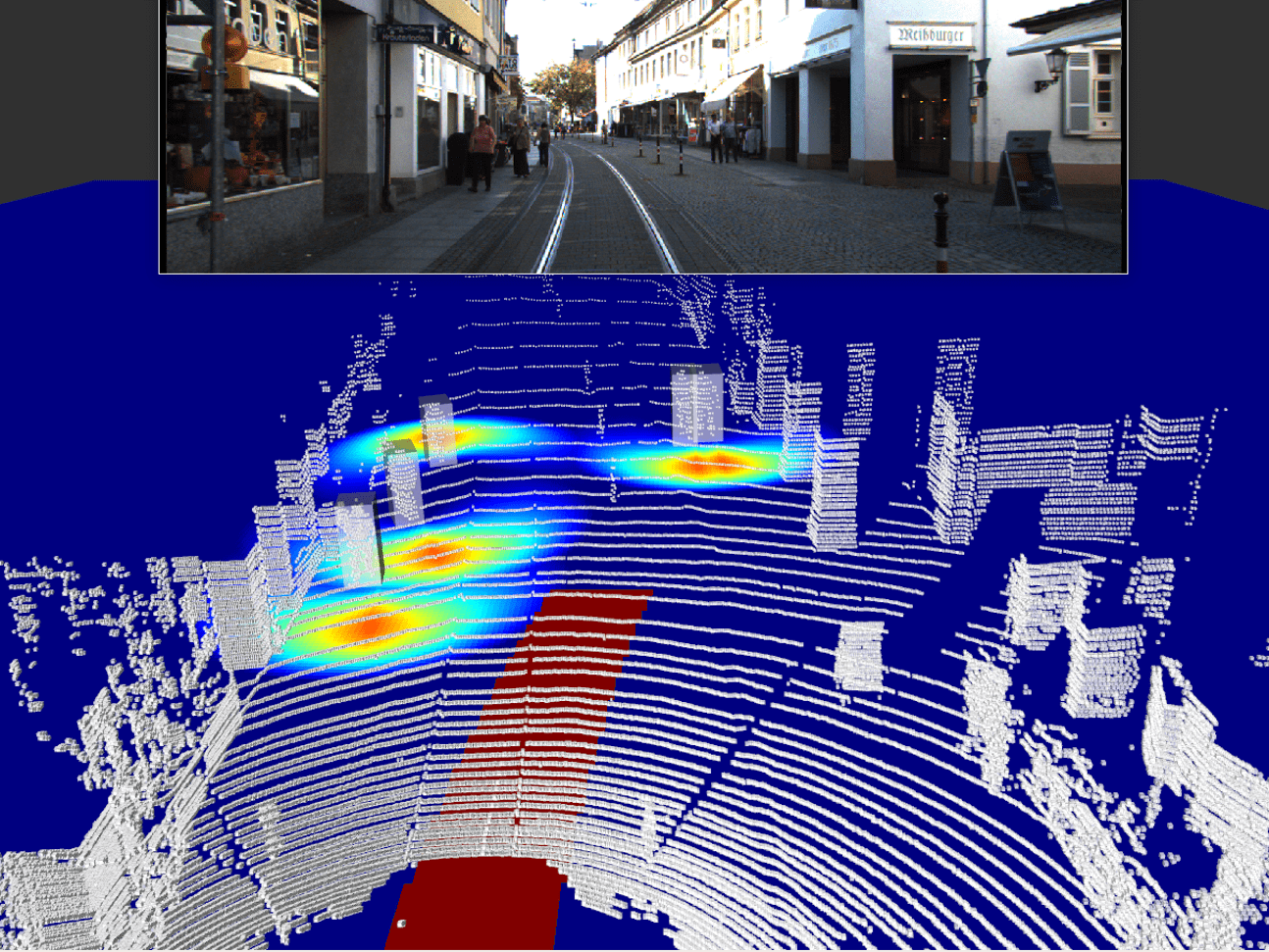

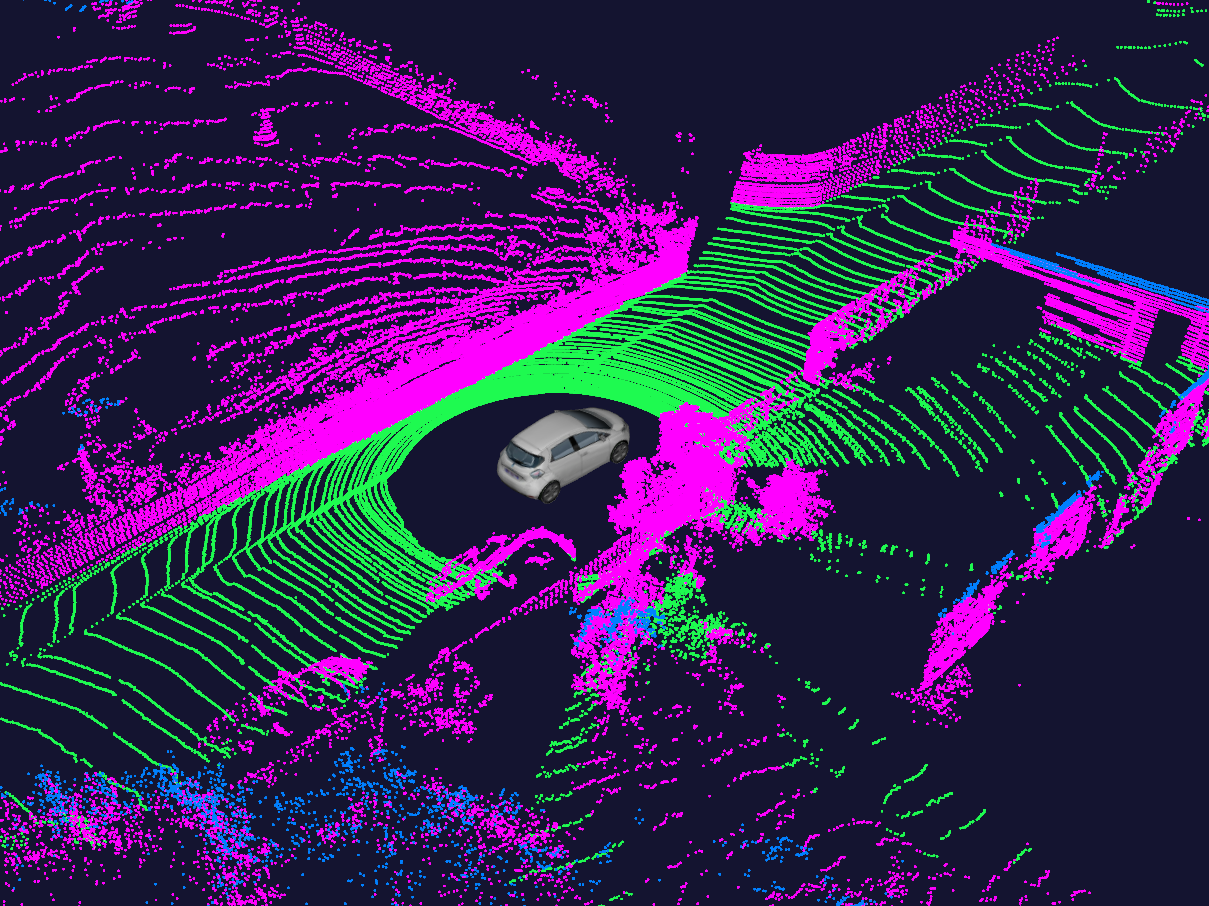

Lukas Rummelhard, Anshul Paigwar, Amaury Negre, Christian Laugier. IEEE Intelligent Vehicles Symposium (IV), 2017. We propose a Spatio-Temporal Conditional Random Field (STCRF) method for ground modeling and labeling in 3D point clouds, based on local ground elevation estimation. Spatial and temporal dependencies within the segmentation process are unified by a dynamic probabilistic framework.


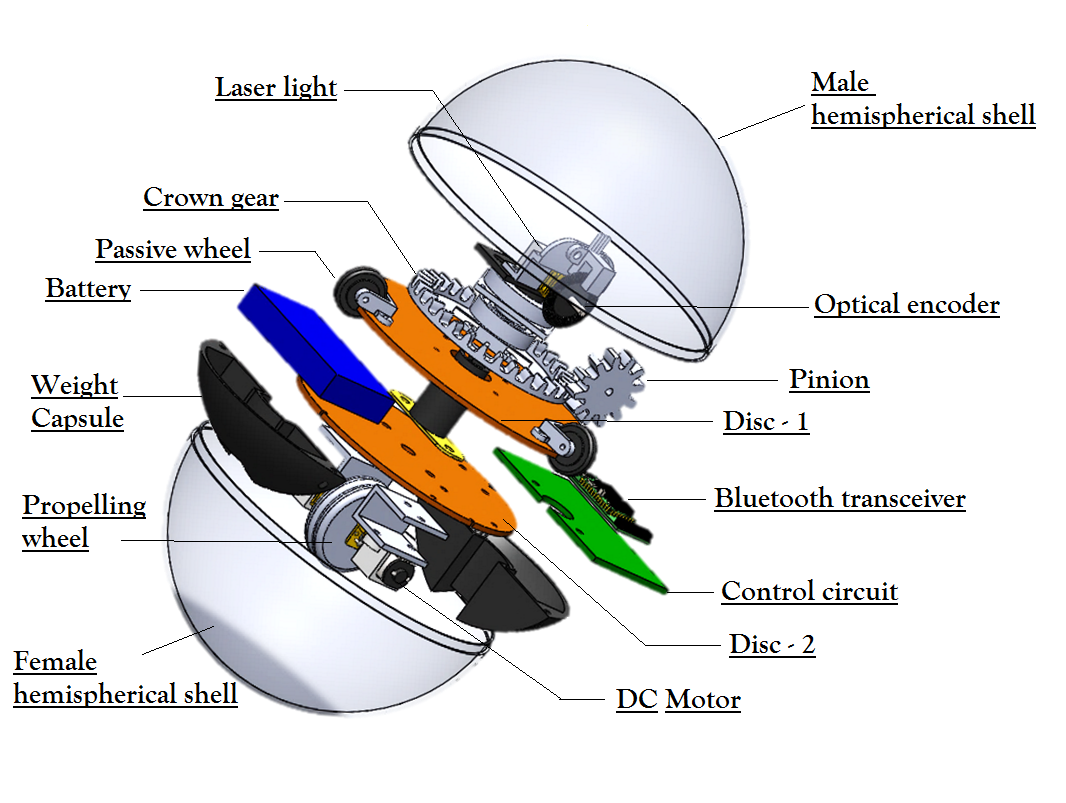

Akash Singh, Anshul Paigwar, Sai Teja Manchukanti, Prasad Vagdargi, Manish Maurya, Manish Saroya, Shital Chiddarwar. IEEE International Conference on Mechatronics (ICM), 2017; International Conference on Reconfigurable Mechanisms and Robots (ReMAR), 2018. We present a novel design of a Compliant Omni-directional snake robot (COSMOS) consisting of mechanically and software-linked spherical robot modules.


I have always been fascinated by robots; building them and bringing them to life is something I take pleasure in. A list of my other projects can be found here.


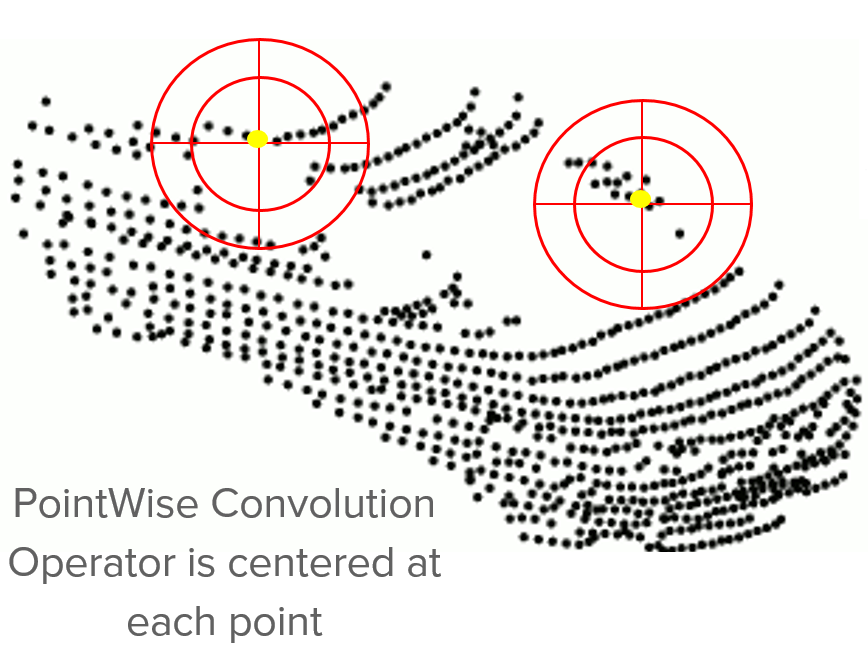

A new pointwise convolution operator (from the original paper) that can be applied at each point of a 3D point cloud and yields competitive accuracy in both semantic segmentation and object recognition tasks.
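As we understand the operator, pointwise convolution bins each point's neighbours into the cells of a small 3D kernel centred on that point, averages the features falling in each cell, and applies a learned weight per cell. The NumPy sketch below follows that description with a 3 x 3 x 3 kernel spanning a cube of half-width `radius`; the function name `pointwise_conv`, the kernel layout, and the brute-force neighbour search are illustrative assumptions rather than the reference implementation.

```python
import numpy as np

def pointwise_conv(points, feats, weights, radius):
    """Brute-force pointwise convolution over a 3 x 3 x 3 kernel.

    points: (N, 3) coordinates; feats: (N, C_in) input features.
    weights: (3, 3, 3, C_in, C_out), one weight matrix per kernel cell.
    radius: half-width of the cubic kernel centred on each point.
    """
    n = len(points)
    out = np.zeros((n, weights.shape[-1]))
    cell = 2.0 * radius / 3.0
    for i in range(n):
        # Kernel-cell index of every point relative to the centre point i.
        idx = np.floor((points - points[i] + radius) / cell).astype(int)
        inside = np.all((idx >= 0) & (idx < 3), axis=1)
        for a, b, c in set(map(tuple, idx[inside])):
            in_cell = inside & np.all(idx == (a, b, c), axis=1)
            # Average the features of the neighbours in this cell, then apply its weights.
            out[i] += feats[in_cell].mean(axis=0) @ weights[a, b, c]
    return out

# Two nearby points that share the centre kernel cell of each other.
pts = np.array([[0.0, 0.0, 0.0], [0.05, 0.0, 0.0]])
f = np.array([[1.0], [3.0]])
w = np.ones((3, 3, 3, 1, 1))
y = pointwise_conv(pts, f, w, radius=0.3)
```

Averaging within each cell keeps the output insensitive to how densely a region is sampled, which matters for LiDAR-style point clouds with uneven density.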


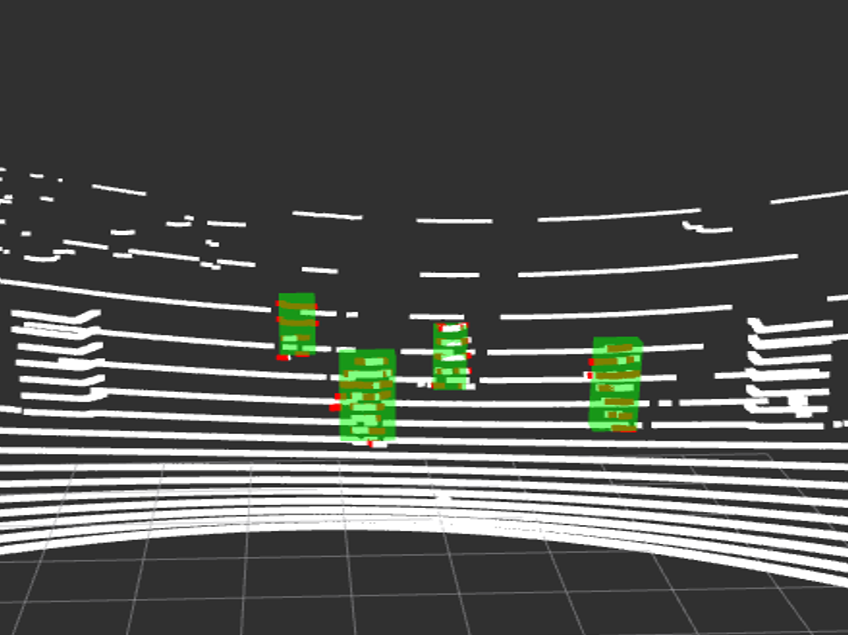

Anshul Paigwar, Zubin Priyansh, Pradyot KVN. Internship at Hitech Robotics Systems, Gurgaon, India. We proposed a new framework for real-time Detection And Tracking of Moving Objects (DATMO) in 3D point clouds. We tested our algorithm on point clouds from an Intel RealSense camera and a Velodyne-16 in indoor environments.


Anshul Paigwar, Anthony Wong. Internship at the Institute for Infocomm Research, Singapore. We fused GPS, odometry, and IMU data for accurate localization of the vehicle, using a Constant Heading and Velocity (CHCV) model for the vehicle dynamics. Finally, the sensor fusion algorithm was tested on the Toyota E-COM experimental autonomous vehicle platform.
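A common way to fuse these sensors is an extended Kalman filter (EKF) whose prediction step uses the CHCV model and whose update steps take GPS position, odometry speed, and IMU heading as measurements. The sketch below is a minimal version under those assumptions; the state layout `[x, y, heading, speed]`, the class name `CHCVEKF`, and all noise values are hypothetical, not the parameters used on the vehicle.

```python
import numpy as np

class CHCVEKF:
    """EKF with a constant heading and velocity (CHCV) motion model.

    State: [x, y, heading, speed]. Noise values are hypothetical placeholders.
    """

    def __init__(self, x0, P0, q=(0.1, 0.1, 0.01, 0.5)):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.asarray(P0, dtype=float)
        self.Q = np.diag(q)

    def predict(self, dt):
        px, py, th, v = self.x
        # CHCV: heading and speed stay constant; position integrates along the heading.
        self.x = np.array([px + v * np.cos(th) * dt, py + v * np.sin(th) * dt, th, v])
        # Jacobian of the motion model, evaluated at the prior state.
        F = np.array([
            [1.0, 0.0, -v * np.sin(th) * dt, np.cos(th) * dt],
            [0.0, 1.0, v * np.cos(th) * dt, np.sin(th) * dt],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0],
        ])
        self.P = F @ self.P @ F.T + self.Q * dt

    def update(self, z, H, R, angle_index=None):
        """Linear measurement update (GPS position, odometry speed or IMU heading)."""
        z = np.atleast_1d(np.asarray(z, dtype=float))
        innov = z - H @ self.x
        if angle_index is not None:
            # Wrap heading innovations to [-pi, pi).
            innov[angle_index] = (innov[angle_index] + np.pi) % (2 * np.pi) - np.pi
        S = H @ self.P @ H.T + R
        K = self.P @ H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innov
        self.P = (np.eye(len(self.x)) - K @ H) @ self.P

# Measurement models for each sensor (all linear in this state layout).
H_GPS = np.array([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])
H_ODOM = np.array([[0.0, 0.0, 0.0, 1.0]])
H_IMU = np.array([[0.0, 0.0, 1.0, 0.0]])

ekf = CHCVEKF(x0=[0.0, 0.0, 0.0, 5.0], P0=np.eye(4))
ekf.predict(dt=0.1)
ekf.update([0.52, 0.01], H_GPS, np.diag([0.5, 0.5]))
ekf.update([5.1], H_ODOM, np.array([[0.1]]))
ekf.update([0.02], H_IMU, np.array([[0.01]]), angle_index=0)
```

Each sensor gets its own update call, so sensors running at different rates can be fused by calling `update` whenever a new measurement arrives and `predict` in between.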


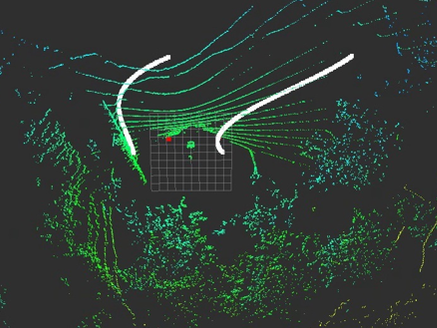

Anshul Paigwar, Anthony Wong. Internship at the Institute for Infocomm Research, Singapore. To detect the road in a 3D point cloud, we first use the RANSAC algorithm to find the dominant plane (the road). We then use a region-growing algorithm and the orientation of the normals relative to the road plane to detect the edges of the road. Finally, we fit a cubic spline through the detected edge points.
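The first step, finding the dominant road plane with RANSAC, can be sketched in a few lines of NumPy. This is a generic RANSAC plane fit, not the internship code; the iteration count, the 5 cm inlier threshold, the function name `ransac_plane`, and the synthetic scene are all hypothetical.

```python
import numpy as np

def ransac_plane(points, num_iters=200, threshold=0.05, seed=0):
    """Fit a dominant plane n . p + d = 0 to an (N, 3) point cloud with RANSAC.

    Returns (normal, d, inlier_mask) for the hypothesis with the most points
    within `threshold` metres of the plane.
    """
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_plane = (np.array([0.0, 0.0, 1.0]), 0.0)
    for _ in range(num_iters):
        # Hypothesise a plane from three distinct random points.
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # collinear sample, no unique plane
            continue
        normal /= norm
        d = -normal @ p0
        inliers = np.abs(points @ normal + d) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_plane = inliers, (normal, d)
    return best_plane[0], best_plane[1], best_inliers

# Synthetic scene: a flat road patch at z ~ 0 plus clutter above it.
rng = np.random.default_rng(1)
road = np.column_stack([rng.uniform(-10, 10, 300), rng.uniform(-10, 10, 300), rng.uniform(-0.01, 0.01, 300)])
clutter = np.column_stack([rng.uniform(-10, 10, 60), rng.uniform(-10, 10, 60), rng.uniform(0.5, 2.0, 60)])
cloud = np.vstack([road, clutter])
normal, d, mask = ransac_plane(cloud)
```

The inlier mask gives the road points from which region growing can start; the final spline fit through the detected edge points could then use, for example, `scipy.interpolate.CubicSpline`.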


This website was forked from source code.